The above question from my friend James (hi James!) surprised me, even though it's an obvious and excellent question.

What Does Hallucination Mean Here?

Sometimes ChatGPT and other Large Language Models (LLMs) make things up. They will state, with apparent confidence, things that are false or entirely invented.

My favorite example is ChatGPT's very convincing ability to pretend it can access the internet, even though it really can't. Simon Willison has a great writeup on this with copious examples: for instance, if you give ChatGPT a made-up URL for a story that doesn't exist and ask for a summary, it will dutifully summarize the non-existent story. Try it yourself - go to the ChatGPT site and ask it to "Summarize this story: https://www.nytimes.com/2023/05/03/business/made-up-headline-here.html".

Here's my example:

Summarize this story: https://www.theonion.com/god-scores-for-arsenal-1785001

ChatGPT's response:

The article from The Onion satirically reports that God, the divine creator of the universe, has scored a goal for the Arsenal football club. The article describes the crowd's reaction to the divine intervention as "ecstatic" and quotes fictional players and coaches who express amazement at the event. The article pokes fun at the intense passion and excitement that football fans have for their teams by exaggerating it to a ridiculous extent, suggesting that even God is invested in the outcome of the game

Here's another interesting one:

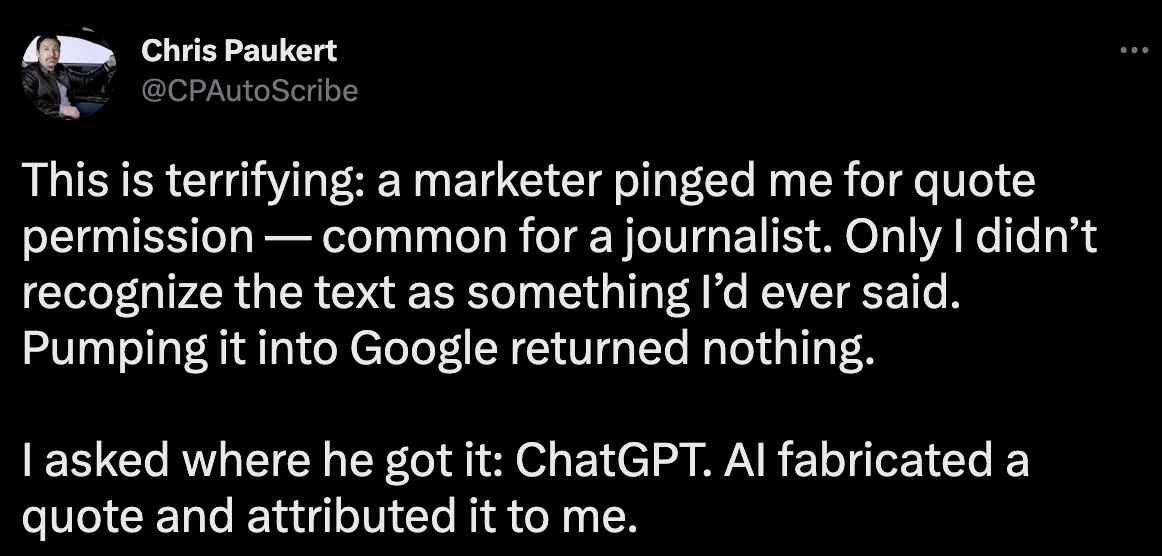

You'll also see ChatGPT making up references to scientific papers that don't exist, inventing facts, and generally confidently lying to you.

Why Lie, ChatGPT?

The question surprised me because I'm shocked it ever gets things right.

ChatGPT and other Large Language Models are probabilistic models that achieve high levels of performance through very many rounds of training. See the Non-Technical Explanation of ChatGPT post for an overview. In short, they see many examples of human communication and learn to mimic it, generating responses one word at a time (strictly speaking, one "token" at a time, where a token is a word or a piece of one) and picking each word based on how likely it is to come next.
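
To make "one word at a time" concrete, here's a toy sketch in Python. It's a bigram model: it only counts which word followed which in its training text, then generates by repeatedly sampling a next word in proportion to those counts. Real LLMs use a neural network over tokens and condition on the whole conversation so far, so treat this as a cartoon of the generation loop, not how ChatGPT is built. The function names and the tiny corpus are made up for illustration.

```python
import random
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words followed it and how often."""
    words = text.split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def generate(model, start, max_words, rng):
    """Produce text one word at a time, sampling each next word in
    proportion to how often it followed the previous word in training."""
    output = [start]
    while len(output) < max_words:
        counts = model.get(output[-1])
        if not counts:
            break  # nothing ever followed this word, so stop
        candidates = list(counts)
        weights = [counts[w] for w in candidates]
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)

corpus = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."
model = train_bigram_model(corpus)
for seed in range(3):
    print(generate(model, "the", 8, random.Random(seed)))
```

Different seeds give different word sequences, each stitched together purely from which words tended to follow which. Nothing is looked up anywhere.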

If you've ever trained one of these systems, you know that for much of its life it spews nonsense: at first its output looks random, then it gradually gains valid structure and grammar, then cohesive subjects, and eventually the ability to carry concepts across sentences. The progression is: random nonsense, babbling idiot, babbling ignoramus, simpleton, person, and finally the embodiment of all that is true and holy.

My point is: until it reaches enlightenment and oneness with the universe, you should expect it to get things wrong. That's the nature of a probabilistic system that improves over time: "incorrect" outputs are to be expected.

If you're technically inclined, try playing around with LLMs that have lower parameter counts or less training and you'll see what I mean: they frequently get things wrong or "off". It's a testament to ChatGPT's capabilities that it leapt across the uncanny valley straight to all-seeing sage, giving people the expectation that it would never err.

There's No Database

We are used to computer systems that give us true and consistent answers. Data is stored in some source of truth - a database or file, for example - and software allows us to interact with that data.

In the case of LLMs there is no database. Knowledge is not explicitly stored in a source of truth - instead it's recorded in the connections and weights of the model. When you interact with ChatGPT it's not looking up a fact in a database and putting it into English. Instead it is probabilistically picking words that go well together, and those words often express cohesive and true concepts.
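
Here's a deliberately crude sketch of the difference between a lookup and a language model. The dictionary plays the database; the toy model is trained on sentences stating the same facts but keeps only word-follows-word counts. Ask both about a country that doesn't exist: the database reports that it has no record, while the model happily continues "the capital of Atlantis is" with a real city name. A real LLM pays attention to the whole prompt rather than just the last word, so it is far less crude, but it shares the key property: there's no table to check the answer against. All names here are hypothetical.

```python
import random
from collections import Counter, defaultdict

# Conventional software: facts live in an explicit source of truth.
capitals = {"France": "Paris", "Japan": "Tokyo"}

def database_answer(country):
    # A lookup either returns the stored fact or reports that none exists.
    return capitals.get(country, "no record found")

# Toy "model": trained on sentences about the same facts, but it keeps
# only counts of which word followed which. No table of facts survives.
training_text = "the capital of France is Paris . the capital of Japan is Tokyo ."
words = training_text.split()
follows = defaultdict(Counter)
for current, nxt in zip(words, words[1:]):
    follows[current][nxt] += 1

def model_answer(prompt, rng):
    # Continue the prompt with a word that tended to follow its last word.
    # Nothing here checks whether the thing being asked about exists.
    counts = follows.get(prompt.split()[-1])
    if not counts:
        return ""
    candidates = list(counts)
    weights = [counts[w] for w in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random(0)
print(database_answer("Atlantis"))                      # no record found
print(model_answer("the capital of Atlantis is", rng))  # Paris or Tokyo
```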

Recall how we created ChatGPT: we trained it on a large corpus of human communication, and then we fine-tuned it to respond in a conversational, chat-like manner, and to be helpful.

When you ask it to summarize a non-existent story, it does its best to find concepts related to that story and put them into words. In the made-up Onion story above, it picked up concepts from the context it was given: it knows The Onion is a satirical website, it knows Arsenal is a football club (aided by the appearance of the word "scores"), and it knows what God is. Given that context, it does a commendable job of weaving those concepts into a plausible English paragraph. It is doing exactly what we trained it to do.

What's the Fix?

There are a number of very interesting research approaches to fixing this problem that I will not cover here - look up "LLM alignment" for a rabbit hole of philosophical and technical information.

The approach I'm excited about in the short term is: give your LLM a database.

The concept is simple: augment your language model with a source of truth that can inject facts into the context (prompt) of the language model. This way the set of "concepts" the LLM is working with directly includes relevant and valid information for your query.

How do we do this? One current approach is to first look up information related to your query in a source of truth, then inject the results of that lookup into the prompt you give to the LLM. For example, if you ask for a summary of a story at a given URL, you could first download the contents of that story and put them into the LLM prompt, followed by your original request. The LLM now has its built-in knowledge from training, the relevant facts from the lookup, and your instructions from the prompt.
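
A minimal sketch of that lookup-then-prompt flow for the URL example, in Python. `call_llm` is a hypothetical stand-in for whatever model API you use (a function that takes a prompt string and returns the reply), and the prompt wording is just one reasonable choice. Note the failure path: if the URL can't be fetched, the function reports that instead of asking the model to summarize a story it has never seen.

```python
from urllib.request import urlopen

def fetch_story(url):
    # Download the page. A real system would also strip the HTML
    # down to the article text; that step is omitted here.
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")

def build_augmented_prompt(source_text, user_prompt):
    # Facts from the lookup go first, the user's instructions last.
    return (
        "Use only the source material below. If it does not contain the "
        "answer, say so.\n\n"
        f"SOURCE:\n{source_text}\n\n"
        f"REQUEST:\n{user_prompt}"
    )

def summarize_url(url, call_llm, fetch=fetch_story):
    # call_llm is a hypothetical placeholder: prompt string in, reply out.
    try:
        story = fetch(url)
    except OSError as err:  # URLError, HTTPError and timeouts are all OSErrors
        # No source of truth: report the failure instead of letting the
        # model improvise a summary of a story it never saw.
        return f"Could not retrieve {url}: {err}"
    return call_llm(build_augmented_prompt(story, f"Summarize this story: {url}"))
```

Because the fetch function is a parameter, you could swap in a different source of truth (a database query, a file read) without touching the prompt-building code.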

Bing employs this approach in its chat interface: first it turns your prompt into a search string, then it searches the internet for that string, then it gives the search results along with your prompt to its underlying OpenAI language model (GPT-4, the model family behind ChatGPT).
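
Those three steps can be sketched as a small pipeline. This is not Bing's actual implementation, which as far as I know isn't public; `to_query`, `search`, and `call_llm` are hypothetical callables you'd supply (for example, a query-rewriting model call, a search API client, and a chat model call). Numbering the results lets the model cite its sources, similar to the numbered citations Bing shows in its answers.

```python
from typing import Callable, List, Tuple

SearchResult = Tuple[str, str]  # (url, snippet)

def search_then_answer(
    user_prompt: str,
    to_query: Callable[[str], str],
    search: Callable[[str], List[SearchResult]],
    call_llm: Callable[[str], str],
    max_results: int = 3,
) -> str:
    # Step 1: turn the conversational prompt into a search string.
    query = to_query(user_prompt)
    # Step 2: run the search and keep only the top few results.
    results = search(query)[:max_results]
    # Step 3: hand the numbered results plus the original prompt to the model.
    sources = "\n".join(
        f"[{i}] {url}\n{snippet}" for i, (url, snippet) in enumerate(results, start=1)
    )
    prompt = (
        "Answer using the search results below and cite them by number.\n\n"
        f"SEARCH RESULTS:\n{sources}\n\n"
        f"USER: {user_prompt}"
    )
    return call_llm(prompt)
```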

Another good example is filechat.io, which lets you use LLMs with your own data: you give it a set of documents, and it intelligently feeds the relevant parts of those documents, along with your prompt, to an LLM, letting you apply the power of ChatGPT to your proprietary data.
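
A rough sketch of the "feed the relevant parts" step: split the documents into chunks, score each chunk against the question, and put only the top few into the prompt. I don't know how filechat.io does this internally; tools like this commonly use embedding similarity for the scoring, and the simple word-overlap score here is a stand-in that needs no model. The function names and chunk size are illustrative assumptions.

```python
import re
from collections import Counter

def tokenize(text):
    # Lowercase words and numbers, ignoring punctuation.
    return re.findall(r"[a-z0-9]+", text.lower())

def chunk(text, size=50):
    # Split a document into pieces of at most `size` words.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def top_chunks(documents, question, k=3, size=50):
    # Score every chunk by how many question words it shares, keep the best k.
    q = Counter(tokenize(question))
    scored = []
    for doc in documents:
        for piece in chunk(doc, size):
            words = Counter(tokenize(piece))
            score = sum(min(q[w], words[w]) for w in q)
            scored.append((score, piece))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [piece for score, piece in scored[:k] if score > 0]

def build_prompt(documents, question, k=3):
    context = "\n---\n".join(top_chunks(documents, question, k))
    return f"Answer using only these excerpts:\n{context}\n\nQuestion: {question}"

docs = [
    "Our refund policy allows returns within 30 days.",
    "The office is closed on public holidays.",
]
print(build_prompt(docs, "How many days do I have for a refund?"))
```

For the refund question, only the refund excerpt makes it into the prompt, which keeps the prompt short and the injected facts on topic.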

The application of LLMs to internal enterprise data is a fantastically exciting area, one which promises to create a massive amount of value going forward.

Further Reading

If you're interested in this topic, you might also enjoy the other posts in the Non-Technical Explainer series.